Lessons from ‘Dragonfly Thinking’ – an AI co-creation experiment

Despite their massive potential, AI-based improvements to policymaking are often hard to implement. But by embracing AI co-creation, it’s possible to move on from the bickering of optimists and skeptics and into a new paradigm, ANU experts Anthea Roberts and Miranda Forsyth argue.


By Anthea Roberts and Miranda Forsyth, Professors at ANU RegNet (School of Regulation and Global Governance).

As advocates of structured thinking, we’ve been excited to put ‘generative AI’ on our academic dance card.

When developing techniques to help policymakers navigate complex issues, be it implementing reforms or achieving sustainability, we found that the techniques worked but were often nearly as complex as the problems they were designed to solve.

Policymakers liked them but struggled to apply them in practice. We wondered: Could AI be the missing piece of the puzzle?

This is what started Dragonfly Thinking, an ANU spin-out that has created a series of AI tools to help break down complex issues and inform better policy decisions. The name draws on the way dragonflies build coherent vision and foresight from the thousands of lenses in their eyes.

People could bring their problems to the AI and, like a tireless research assistant, it would churn out a first draft. That draft would help them think through tough issues, avoid being blindsided, and perhaps gain insights they might have missed on their own. Then they could refine and iterate.

In the early days, we envisioned a seamless marriage between human insight and artificial intelligence. Then came a reality check – just how differently people use AI.

Two distinct camps emerged. There were ‘AI optimists’, flushed with excitement and sci-fi dreams, who seemed to expect a machine that would solve all problems, instantly and flawlessly.

And then there were ‘AI skeptics’, who, with arms crossed, questioned not its utility but its ethics. Would it introduce biases? Could it be trusted when its reasoning processes weren’t transparent?

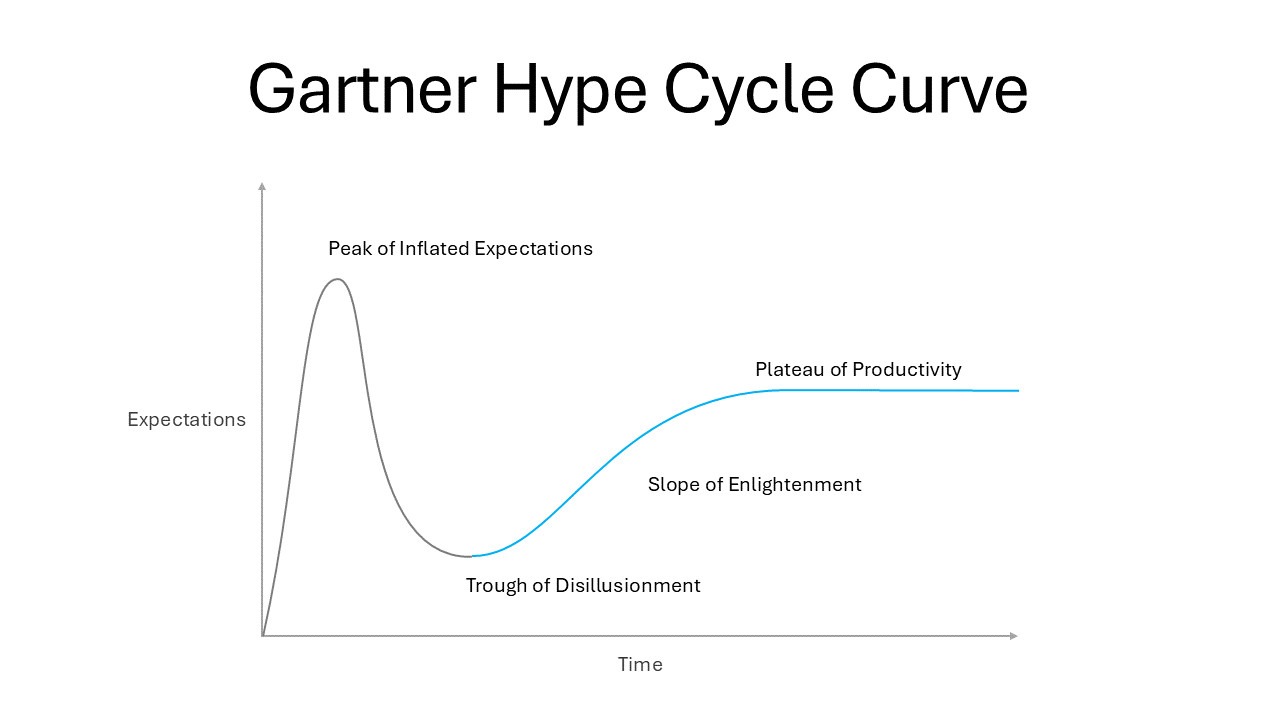

Everyone we encountered was stuck on the same rollercoaster. They were riding what's known as the Gartner Hype Cycle, just at different points along the track.

This curve, ubiquitous in the tech world, charts how expectations of a new technology typically rise and fall. People quickly climb to the ‘Peak of Inflated Expectations’, where fantasies reign supreme, only to plunge into the ‘Trough of Disillusionment’ when the technology inevitably falls short.

But direct your eyes to the next part of the curve: the ‘Slope of Enlightenment’. It carries its riders to the ‘Plateau of Productivity’. It’s here, between the highs and lows, that the real work of learning to dance with AI begins.

How, we wondered, do we get people to move up the slope?

By helping people see AI not as a magical oracle or a dystopian threat, but as a collaborative partner. By shifting their mindsets so they stop expecting perfection and work with AI as they might with a co-author, using negotiation and critique to build something together.

Anyone who’s written with a partner knows the delicate choreography of collaboration. Drafts evolve through revision, creating moments of brilliance and frustration alike. Most people don’t even think to criticise a co-author for submitting a draft that is less than perfect.

Instead, they compromise, collaborate, discuss, refine, and produce something better than either creator could have made alone. The same holds true for using AI.

One striking finding from Dragonfly Thinking has been how using AI changes a human's role in the creative process. People become directors, responsible for explaining the task to the AI in as much detail as possible. Rather than simply generating content, users then become editors, finessing and interrogating the results until they meet human standards. In the process, they take on new roles as authors, educators, and mentors.

The experience raised some critical questions about the future of human-AI collaboration that are worth policymakers' consideration.

What will the ‘Slope of Enlightenment’ look like for a government? When the leap is made from merely using AI to truly co-creating with it, what else changes? And how does this affect the nature of work?

And what about the ‘Plateau of Productivity’? Does human-AI co-creation produce better outcomes than human decision-making alone or, conversely, than leaving decisions solely to AI? When human judgment and intuition combine with AI's powerful analysis, could we achieve more together than either could alone?

These are the questions that guide us these days as we learn to dance with the dragonfly, co-creating with AI as a collaborator.